Co-Adaptive Interfaces

The problem: Chat UIs are brittle. Your intent, taste and goals stay locked in an opaque, un-portable context window.

The idea: A co-adaptive editor turns every accept/reject diff into a versioned, ownable “preference capsule” you can drag across tasks, apps and models.

How the loop works: Each binary choice updates a living policy that shrinks bits-per-decision and grows a model of you.

Why this beats prompting: A single in-context choice is the cleanest signal.

Taste: A > B given context C, the atomic unit the system generalises from.

Portability: Preference capsules are auditable, diff-able, open-source-able; resolving merge conflicts becomes a research problem worth having.

Against context rot: Explicit, versioned goals kill the “revive the prompt” tax that eats 30-50% of long sessions.

Coupling: A lightweight dependency map shows blast-radius before you commit.

Co-adaptation: The editor keeps rewriting itself until the only thing left to do is exactly what you wanted in the first place.

Chat interfaces are a brittle status quo. Prompts stay static. What the model thinks you want, what you could actually use, and what you’re quietly trying to achieve all stay locked inside an opaque context window you can’t audit, port, or merge.

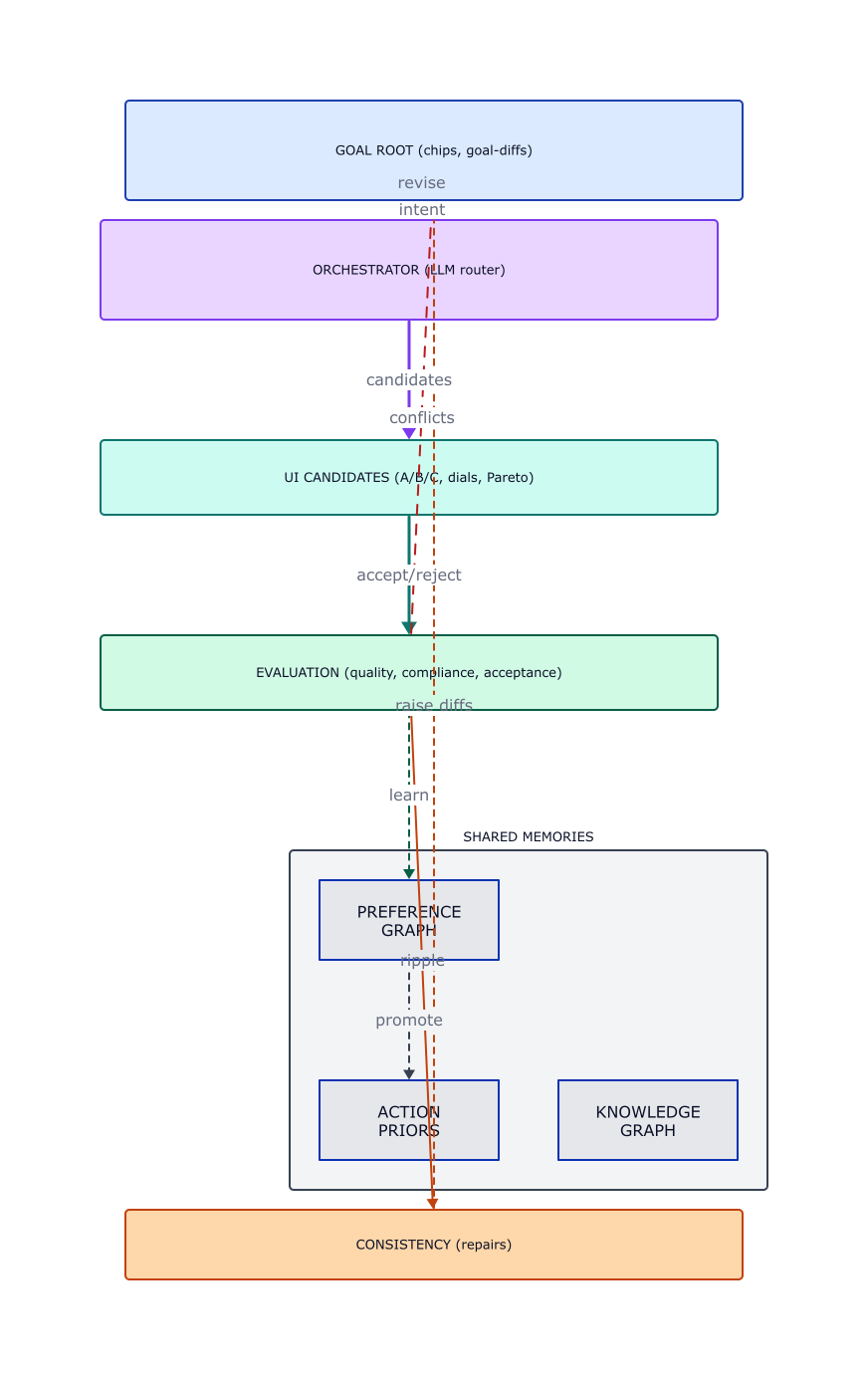

A good generative tool learns what you like (preferences), what you can use (knowledge), and what you’re trying to achieve (goals), and then repairs inconsistencies when they pop up. My idea is a co-adaptive editor in which every action informs the next change to the content, the representation (UI) and … actions. Every diff simultaneously rewrites the content (target) and the editor’s own interface policy, so the UI keeps shrinking the bits per choice while growing a model of you. The best signal you can give a system isn’t a paragraph of instructions; it’s a tiny choice in context.

You should own every action you make, and you should be able to port and reuse those actions as data across tasks, apps and models. Right now, preferences stay implicit and the trade-offs are mostly hidden from you. You don’t even know what the model thinks you want.

Every edit you perform feeds a living loop that rewrites itself. It runs off your taste, which I define as A > B given context C. This is the atomic unit of learning that the system can observe and generalize from. There can be thousands of ad-hoc UI components and interface compositions. A normal chat UI is the last resort, for when the user is stuck in a local minimum or the UI isn’t expressive enough. I predict this will happen when upper-level nodes like goals or ideas change starkly. Models get rewarded for aesthetics, elegance and creativity within the constraints.
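To make the atomic unit concrete, here is a minimal sketch of how one accept/reject choice could be stored as data and exported. Everything in it is an assumption for illustration: the `Preference` record, the `record_choice` and `export_capsule` helpers, and the JSON layout are hypothetical names, not an existing format.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class Preference:
    """One observation of taste: `chosen` > `rejected` given `context`."""
    context: str   # C: where the choice was made
    chosen: str    # A: the option the user kept
    rejected: str  # B: the option the user discarded


def record_choice(log, context, option_a, option_b, accepted_a):
    """Append one binary choice to the log and return the stored record."""
    if accepted_a:
        chosen, rejected = option_a, option_b
    else:
        chosen, rejected = option_b, option_a
    pref = Preference(context, chosen, rejected)
    log.append(pref)
    return pref


def export_capsule(log, version):
    """Serialize the log as a versioned, diff-able JSON document."""
    payload = {"version": version, "preferences": [asdict(p) for p in log]}
    return json.dumps(payload, indent=2)
```

Because the export is plain text with stable keys, ordinary diff and merge tooling can be pointed at it, which is the property the rest of this post leans on.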

The more you iterate, the tighter the loop gets: contrastive previews get faster, the deltas get smaller and more legible, and the editor’s policy converges on your private trade-offs. There are fewer bits per decision, clearer choices and reusable proxies for alignment. The alignment artifact becomes portable, auditable and mergeable. People can open-source them. Resolving preference merge conflicts between organizations and people will be a cool area of research.
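One way to read "fewer bits per decision" is as the entropy of the editor's prediction about your next choice. The sketch below is an assumption on my part, not a description of a built system: a hypothetical `ChoicePolicy` keeps smoothed counts per kind of choice and reports the binary entropy of its current guess.

```python
import math
from collections import defaultdict


class ChoicePolicy:
    """Toy policy: per choice type, estimate P(user picks the first option)."""

    def __init__(self):
        # Laplace-smoothed counts: [first option picked, second option picked]
        self.counts = defaultdict(lambda: [1, 1])

    def p_first(self, kind):
        first, second = self.counts[kind]
        return first / (first + second)

    def update(self, kind, chose_first):
        """Fold one observed binary choice into the counts."""
        self.counts[kind][0 if chose_first else 1] += 1

    def bits_per_decision(self, kind):
        """Binary entropy of the prediction: 1.0 means the policy knows nothing."""
        p = self.p_first(kind)
        if p <= 0.0 or p >= 1.0:
            return 0.0
        return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))
```

With no history the policy is maximally uncertain (one full bit). After a run of consistent choices the entropy falls, which is the point at which the editor could stop asking and simply apply the default.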

Goals change, especially when they make contact with reality. To me it’s low-hanging fruit to help users understand how their actions reflect upon what the system thinks their goals actually are. When you’re in a long chat with a model, the entire conversation is the context that does this implicitly. Later instructions carry more weight, but the system should verify the change and delete the old instructions from its memory. This helps with context rot: models get distracted and MUCH dumber after the first few hundred tokens, and making goals explicit and versioned means you can verify and refresh the alignment without re-explaining everything. If I look back at my chat logs over the last year, about 1/3 to 1/2 of my time went to reviving and resetting context and making the implicit explicit again. Companies have tried this, but it has been an afterthought, badly implemented and generally not portable to other systems … never mind other modalities. (I actually believe there’s transfer and transitivity between the senses: preference patterns learned in one modality, such as text editing, could inform interactions in others, such as image generation or code editing.)
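A rough sketch of what "explicit and versioned" goals could look like in practice. The `GoalLog` class and its `propose`, `confirm` and `current` methods are hypothetical names I am using for illustration; the only idea taken from the text is that a changed goal is recorded as a new version and does not replace the old one until the user has verified it.

```python
from dataclasses import dataclass, field


@dataclass
class GoalVersion:
    version: int
    text: str
    confirmed: bool = False  # flipped only after the user verifies it


@dataclass
class GoalLog:
    history: list = field(default_factory=list)

    def propose(self, text):
        """Record what the system now thinks the goal is, pending verification."""
        goal = GoalVersion(len(self.history) + 1, text)
        self.history.append(goal)
        return goal

    def confirm(self, version):
        """Mark a proposed goal as verified by the user."""
        self.history[version - 1].confirmed = True

    def current(self):
        """Return the latest confirmed goal, or None if nothing is confirmed."""
        for goal in reversed(self.history):
            if goal.confirmed:
                return goal
        return None
```

Refreshing a long session then means re-sending only `current()` rather than replaying the whole conversation, and the full history stays around for auditing.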

Another simple idea is to be explicit about conceptual coupling. Structural coupling is easy to see (children sit below parents). But semantic coupling is where most of the remedial editing effort goes. Practically, we can display a lightweight dependency map (headings, refs, claims, variables) or count affected nodes per edit: when the count is high, the editor can offer a batch refactor/repair, or the user might just decide not to care and relax that particular constraint. Unlike great software, great essays are often highly coupled. Why not make the editor work with that?
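Counting affected nodes per edit can be sketched as a plain graph traversal. This assumes the dependency map is available as a dictionary from each node to the nodes that depend on it; the `blast_radius` function and the node labels below are made up for the example.

```python
from collections import deque


def blast_radius(dependents, edited):
    """Return every node reachable from `edited` via 'depends on me' edges."""
    seen = {edited}
    queue = deque([edited])
    while queue:
        node = queue.popleft()
        for nxt in dependents.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(edited)  # the edited node itself is not collateral
    return seen


# Hypothetical map for an essay: a claim feeds two sections and the conclusion.
deps = {
    "claim:taste": ["section:loop", "section:portability"],
    "section:loop": ["conclusion"],
    "section:portability": ["conclusion"],
}
affected = blast_radius(deps, "claim:taste")
print(len(affected), sorted(affected))
```

The editor could show `len(affected)` before the commit and switch to a batch repair flow above some threshold; where that threshold sits is a design choice I have not tested.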

The result is a loop that learns what you like (preferences), what you can use (knowledge), and what you’re trying to achieve (goals), and then repairs inconsistencies as they arise.