The Business of Extracting Knowledge from Academic Publications

I meant to post this much earlier but have been fighting with neurological longhaul covid symptoms for most of the year.

You can comment on this post on Twitter or HN

Psychoanalysis of a Troubled Industry

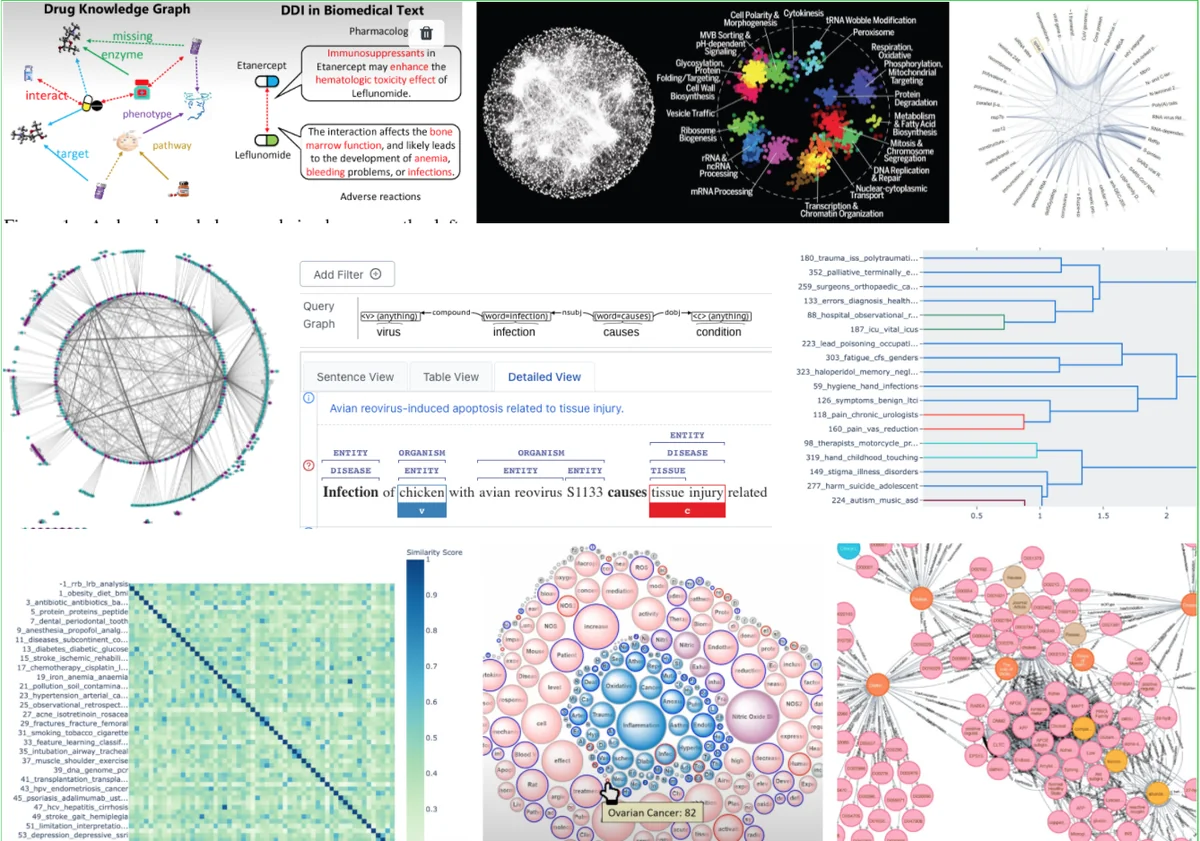

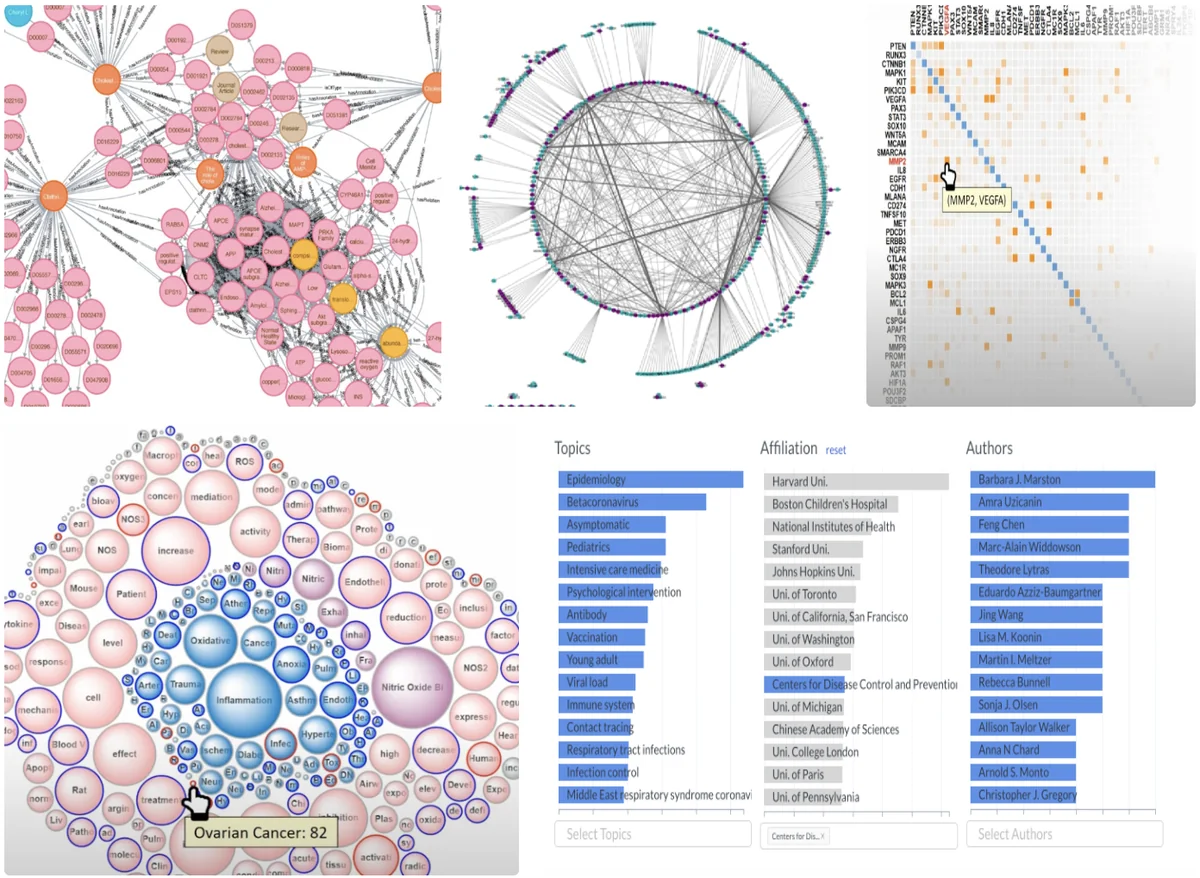

An industry has evolved atop the published biomedical literature around extracting, semantically structuring and synthesizing research papers into search, discovery and knowledge graph software applications. The usual sales pitch goes something like this:

Generate and Validate Drug Discovery Hypotheses Faster Using our Knowledge Graph. Keep up with the scientific literature, search for concepts and not papers, and make informed discovery decisions.

Find the relevant knowledge for your next breakthrough in the X million documents and Y million extracted causal interactions between genes, chemicals, drugs, cells…

Our insight prediction pipeline and dynamic knowledge map is like a digital scientist.

Try our sentence-level, context-aware, and linguistically informed extractive search system.

Or from a grant application of yours truly:

A high-level semantic search engine and user interface for answering complex, quantitative research questions in biomedicine.

You get the idea. All the projects above spring from similar premises:

- The right piece of information is “out there”

- If only research outputs were more machine interpretable, searchable and discoverable then “research” could be incrementally automated and progress would go through the roof

- No mainstream public platform (e.g. arXiv, PubMed, Google Scholar) provides features that leverage the last decade’s advances in natural language processing (NLP), and none of them improves on the tasks mentioned in the previous point.

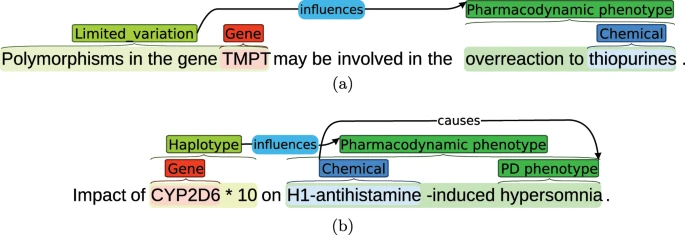

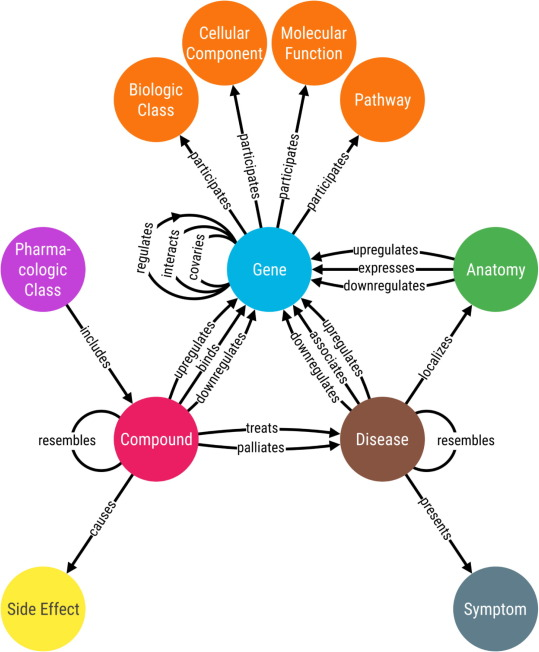

Which leads them to conclude: Let’s semantically annotate every piece of literature and incorporate it into a structured knowledge base that enables complex research queries, revealing undiscovered public knowledge by connecting causal chains (Swanson linking) or generating new hypotheses altogether.
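To make “connecting causal chains” concrete, here is a minimal sketch of Swanson-style ABC linking, assuming relations have already been extracted from papers as (source, target) pairs. The function name `swanson_links` and the toy relations are illustrative assumptions (the fish oil chain echoes Swanson’s well-known example, “drug X” is made up), not the pipeline of any product mentioned here.

```python
from collections import defaultdict


def swanson_links(relations):
    """Map each (a, c) pair to the intermediates b where a->b and b->c exist
    but a->c was never stated directly (the 'undiscovered' link)."""
    targets = defaultdict(set)
    for source, target in relations:
        targets[source].add(target)
    direct = set(relations)

    hidden = defaultdict(list)
    for a, intermediates in targets.items():
        for b in sorted(intermediates):
            # .get() avoids inserting new keys while we iterate over targets
            for c in sorted(targets.get(b, ())):
                if c != a and (a, c) not in direct:
                    hidden[(a, c)].append(b)
    return dict(hidden)


# Toy, hand-written relations standing in for machine-extracted ones.
relations = [
    ("fish oil", "blood viscosity"),
    ("blood viscosity", "Raynaud's syndrome"),
    ("fish oil", "platelet aggregation"),
    ("platelet aggregation", "Raynaud's syndrome"),
    ("drug X", "platelet aggregation"),
    ("drug X", "Raynaud's syndrome"),  # already stated directly, so not "hidden"
]

links = swanson_links(relations)
for (a, c), via in links.items():
    print(f"{a} -> {c} via {', '.join(via)}")
```

The mechanics are trivial; the hard parts are the quality of the extracted pairs and deciding which of the many resulting links deserve an expert’s attention.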

It really does sound exciting, and VCs and governments like to fund the promise of it. Companies get started, often get a few pilot customers, end up as software consultancies, or silently fail. In rare cases they get acquired for cheap or survive as a modest “request a demo” B2B SaaS with 70% of the company in sales and unit economics that lock them into selling only to enterprises and never to individuals 0.

Meanwhile, big players with all the means to provide a category-leading platform have either stopped developing such an effort altogether (Google Scholar), shut it down without anybody caring (Microsoft Academic Search) or are unable to commercialize any of it (AllenAI with SemanticScholar, Spike etc.).

Let’s take AllenAI as an example. AllenAI is a world-renowned AI research organization with a focus on NLP and automated reasoning. They sponsor a startup incubator to spin off and commercialize their technologies. The AllenNLP platform and SemanticScholar are used by millions of engineers and researchers. And unlike most big AI research groups they also build domain-focused apps for biomedicine (like supp.ai and Spike).

And yet, despite all of that momentum, they can’t commercialize any of it:

We have been looking at opportunities to commercialize the technologies we developed in Semantic Scholar, Spike, bioNLP, etc. The challenge for us so far is in finding significant market demand. I’m curious if you have found an angle on this ? […]

This was an email reply I received from a technical director at the AllenAI institute. At that point, I had already spent months building and trying to sell biomedical NLP applications (bioNLP) to biotechs, which had made me cynical about the entire space. Sure, I might just be bad at sales, but if AllenAI, with 10/10 engineering, distribution and industry credibility, can’t sell their offerings, then literature review, search and knowledge discovery are just not that useful…

When I then looked at the SUM of all combined funding, valuations and exits of the NLP companies I could find in the semantic search / biomedical literature search / biomedical relation extraction space, it was less than the valuation of any single bigger bioinformatic drug discovery platform (e.g. Atomwise, Insitro, nFerence). Assuming the market is efficient and not lagging, it shows how little of a problem biomedical literature search and discovery actually is.

Just to clarify: This post is about the issues with semantic intelligence platforms that predominantly leverage the published academic literature. Bioinformatic knowledge apps that integrate biological omics data or clinical datasets are actually very valuable (e.g. Data4cure), as is information extraction from Electronic Health Records [EHRs] (like nFerence, scienceIO) and patent databases.

My Quixotic Escapades in Building Literature Search and Discovery Tools

Back in March 2020 when the first covid lockdowns started I evacuated San Francisco and moved back to Austria. I decided to use the time to get a deeper understanding of molecular biology and began reading textbooks.

Before biology, I had worked on text mining, natural language processing and knowledge graphs and it got me thinking … could you build something that can reason at the level of a mediocre biology graduate?

After a few weeks of reading papers in ontology learning, information extraction and reasoning systems and experimenting with toy programs, I had some idea of what’s technologically possible. I figured that a domain-focused search engine would be much simpler to build than a reasoner/chatbot and that the basic building blocks are similar in any case 0.5.

I wrote up what I had in mind, applied to Emergent Ventures and was given a grant to continue working on the idea.

At that time I also moved to London as an Entrepreneur First (EF) fellow. I made good friends in the program and teamed up with Rico. We worked together for the entirety of the three month program. During that time we prototyped:

a search engine with entity, relation and quantitative options: A user could run detailed, expressive queries like:

< studies that used gene engineering [excluding selective evolution] in yeast [species A and B only] and have achieved at least [250%] in yield increase >

< studies with women over age 40, with comorbidity_A that had an upward change in biomarker_B >

They’d tell us in English and we’d translate it into our query syntax

This collapses a lot of searches and filtering into one query that isn’t possible with known public search engines, but we found out that it’s not often in a biotech’s lifecycle that questions like this need researching. To get a notion of ratios: one search like this could return enough ideas for weeks of lab work.

a query builder (by demonstration): A user would upvote study abstracts or sentences that fit their requirements and it would iteratively build a configuration. In a sense it was a no-code way for biologists to write interpretable labeling functions while also training a classifier (a constrained case of program synthesis).

We built this because almost no biologist could encode or tell us exactly which research they wanted to see, but they “know it when they see it”.

A paper recommender system (and later claim/sentence/statement level) that, in addition to academic literature, included tweets, company websites and other non-scholarly sources (lots of scientific discourse is happening on Twitter these days)

A claim explorer that takes a sentence/paragraph and lets you browse through similar claims

A claim verifier that showed sentences that confirm or contradict an entered claim sentence. It worked ok-ish but the tech is not there to make fact checking work reliably, even in constrained domains.

And a few more Wizard of Oz experiments to test different variants and combinations of the features above
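As a rough illustration of how the first prototype collapsed several filters into one query, here is a minimal sketch that runs the yeast example over already-extracted study records. The `Study` schema, the `search` signature and the four toy records are hypothetical; they stand in for whatever the extraction pipeline actually produced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    """One paper after extraction; every field is a hypothetical schema."""
    title: str
    methods: frozenset
    species: str
    yield_increase_pct: float


def search(studies, *, method, exclude_methods=(), species=(),
           min_yield_increase_pct=0.0):
    """Apply entity, exclusion and quantitative filters in a single pass."""
    excluded = set(exclude_methods)
    allowed_species = set(species)
    return [
        s for s in studies
        if method in s.methods
        and not (excluded & s.methods)
        and s.species in allowed_species
        and s.yield_increase_pct >= min_yield_increase_pct
    ]


# Toy records, invented for illustration only.
studies = [
    Study("Paper 1", frozenset({"gene engineering"}), "species A", 310.0),
    Study("Paper 2", frozenset({"gene engineering", "selective evolution"}),
          "species A", 400.0),
    Study("Paper 3", frozenset({"gene engineering"}), "species C", 500.0),
    Study("Paper 4", frozenset({"gene engineering"}), "species B", 120.0),
]

hits = search(
    studies,
    method="gene engineering",
    exclude_methods=["selective evolution"],
    species=["species A", "species B"],
    min_yield_increase_pct=250.0,
)
print([s.title for s in hits])
```

Only the first toy record passes all four filters. The filtering itself is the easy part; the value of such a query depends entirely on how reliably methods, species and quantities were extracted from the text in the first place.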

At that point, lots of postdocs had told us that some of the apps would have saved them months during their PhD, but actual viable customers were only moderately excited. It became clear that this would have to turn into another B2B SaaS specialized tool with a lot of software consulting and ongoing efforts from a sales team …

We definitely did not want to go down that route. We wanted to make a product for consumers, startups, academics or independent researchers and had tested good-enough proxies for most ideas we thought of as useful w.r.t. the biomedical literature. We also knew that pivoting to Electronic Health Records (EHRs) or patents instead of research text was potentially a great business, but neither of us was excited to spend years working on that, even if successful.

So we were stuck. Rico, who didn’t have exposure to biotech before we teamed up, understandably wanted to branch out and so we decided to build a discovery tool that we could use and evaluate the merits of ourselves.

And so we loosened up and spent three weeks playing around with knowledge search and discovery outside of biomed. We built:

a browser extension that finds similar paragraphs (in essays from your browser history) to the text you’re currently highlighting (when reading on the web). A third of the time the suggestions were insightful, but the download-retrain-upload loop for the embedding vectors every few days was tedious and we didn’t like it enough to spend the time automating it.

similar: an app that pops up serendipitous connections between a corpus (previous writings, saved articles, bookmarks …) and the active writing session or paragraph. The corpus, preferably your own, could be from folders, text files, a blog archive, a Roam Research graph or a Notion/Evernote database. This had a surprisingly high signal-to-noise ratio from the get-go and I still use it sometimes.

a semantic podcast search (one version for the Artificial Intelligence Podcast is still live)

an extension that after a few days of development was turning into Ampie and was shelved

an extension that after a few days of development was turning into Twemex and was shelved
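The “similar paragraphs” experiments above boil down to nearest-neighbour search over text vectors. Here is a minimal, dependency-free sketch of that idea. It deliberately swaps the learned embedding vectors we used for plain word-count vectors, and the function names and toy corpus are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Bag-of-words counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def most_similar(highlight, corpus, k=1):
    """Return the k corpus paragraphs closest to the highlighted text."""
    query = vectorize(highlight)
    ranked = sorted(corpus, key=lambda p: cosine(query, vectorize(p)),
                    reverse=True)
    return ranked[:k]


# Toy corpus standing in for essays from a browser history.
corpus = [
    "Knowledge graphs link entities such as genes and drugs.",
    "Sourdough bread needs a long fermentation.",
    "Liquid handling robots clog often.",
]
highlight = "Pharma companies build knowledge graphs of genes and drugs."
print(most_similar(highlight, corpus))
```

With real embeddings the vectors have to be recomputed whenever the corpus or the model changes, which is exactly the download-retrain-upload loop that made the extension tedious to maintain.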

Some of the above had promise for journalists, VCs, essayists or people that read, cite and tweet all day, but that group is too heterogeneous and fragmented and we couldn’t trust our intuition building for them.

To us, these experiments felt mostly like gimmicks that, with more love and industry focus 1.5, could become useful but probably not essential.

Now it was almost Christmas and the EF program was over. Rico and I decided to split up as a team because we didn’t have any clear next step in mind 1. I flew to the south of Portugal for a few weeks to escape London’s food, bad weather and the upcoming covid surge.

There, I returned to biomed and tinkered with interactive, extractive search interfaces and no-code data programming UIs (users can design labeling functions to bootstrap datasets without coding expertise).
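For readers unfamiliar with data programming, here is a minimal sketch of what labeling functions look like once written as code. The three heuristics, the label constants and the majority vote are illustrative assumptions; data programming systems typically learn how much to trust each function rather than counting votes, and the no-code UI was meant to generate such functions from a user’s clicks.

```python
ABSTAIN, IRRELEVANT, RELEVANT = -1, 0, 1


# Each labeling function encodes one cheap, interpretable heuristic.
def lf_mentions_yeast(text):
    return RELEVANT if "yeast" in text.lower() else ABSTAIN


def lf_reports_yield(text):
    return RELEVANT if "yield" in text.lower() else ABSTAIN


def lf_is_review(text):
    return IRRELEVANT if "review" in text.lower() else ABSTAIN


LABELING_FUNCTIONS = [lf_mentions_yeast, lf_reports_yield, lf_is_review]


def weak_label(text, lfs=LABELING_FUNCTIONS):
    """Combine noisy votes by simple majority; ties and silence abstain."""
    votes = [v for v in (lf(text) for lf in lfs) if v != ABSTAIN]
    relevant = votes.count(RELEVANT)
    irrelevant = votes.count(IRRELEVANT)
    if relevant == irrelevant:
        return ABSTAIN
    return RELEVANT if relevant > irrelevant else IRRELEVANT


# Toy abstract titles, invented for illustration.
abstracts = [
    "Engineered yeast strain doubles ethanol yield",
    "A review of crop genetics",
    "A review of yeast genetics",
    "Protein folding in mice",
]
labels = [weak_label(a) for a in abstracts]
print(labels)
```

The weak labels can then bootstrap a training set for a classifier, and the functions stay readable enough for a biologist to inspect and correct.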

Ironically, I got covid in Portugal and developed scary neurological long-hauler symptoms after the acute infection. Except for a handful of ‘good days’, spring and summer came and went without me being able to do meaningful cognitive work. Fortunately, this month symptoms have improved enough to finish this essay.

Fundamental Issues with Structuring Academic Literature as a Business

As I said in the beginning:

extracting, structuring or synthesizing “insights” from academic publications (papers) or building knowledge bases from a domain corpus of literature has negligible value in industry

To me the reasons feel elusive and trite simultaneously. All are blatantly obvious in hindsight.

Just a Paper, an Idea, an Insight Does Not Get You Innovation

Systems, teams and researchers matter much more than ideas. 2

It wasn’t always like that. In the 19th century ideas (inventions) were actually the main mechanism for innovation. From Notes on The changing structure of American innovation:

The period from 1850-1900 could be described as the age of the inventor. During this period inventors were the main mechanism for creating innovations. These folks would usually sell these patents directly to large companies like the telegraph operators, railroads, or large chemical companies. The companies themselves did little R&D and primarily operated labs to test the patents that inventors brought them

But the complexity threshold kept rising and now we need to grow companies around inventions to actually make them happen:

DuPont bought the patent for creating viscose rayon (processed cellulose used for creating artificial silk and other fibers.) However, Dupont was unable to replicate the process successfully and eventually had to partner with the original inventors to get it to work

[…] making systems exponentially more valuable than single technologies

That’s why incumbents increasingly acqui-hire instead of just buying the IP, and most successful companies that spin out of labs have someone who did the research as a cofounder. Technological utopians and ideologists like my former self underrate how important context and tacit knowledge are.

And even if you had that context and were perfectly set up to make use of new literature … a significant share of actionable, relevant research findings aren’t published when they’re hot but after the authors milk them and the datasets for a sequence of derivative publications or patent them before publishing the accompanying paper (half the time behind paywalls) months later.

In the market of ideas information asymmetries turn into knowledge monopolies.

As mentioned in Market Failures in Science:

Scientists are incentivized to, and often do, withhold as much information as possible about their innovations in their publications to maintain a monopoly over future innovations. This slows the overall progress of science.

For example, a chemist who synthesizes a new molecule will publish that they have done so in order to be rewarded for their work with a publication. But in the publication they will describe their synthesis method in as minimal detail as they can while still making it through peer review. This forces other scientists to invest time and effort to reproduce their work, giving them a head-start in developing the next, better synthesis.

All that is to say: discovering relevant literature, compiling evidence and finding mechanisms turn out to be a tiny percentage of actual, real-life R&D.

Contextual, Tacit Knowledge is not Digital, not Encoded or just not Machine-Interpretable yet

Most knowledge necessary to make scientific progress is not online and not encoded. All tools on top of that bias the exploration towards encoded knowledge only (drunkard’s search), which is vanishingly small compared to what scientists need to embody to make progress.

Expert and crowd-curated world knowledge graphs (eg. ConceptNet) can partially ground a machine learning model with some context and common sense but that is light years away from an expert’s ability to understand implicature and weigh studies and claims appropriately.

ML systems are fine for pattern matching, recommendations and generating variants but in the end great meta-research, including literature reviews, comes down to formulating an incisive research question, selecting the right studies and defining adequate evaluation criteria (metrics) 2.5.

Besides, accurately and programmatically transforming an entire piece of literature into a computer-interpretable, complete and actionable knowledge artifact remains a pipe dream.

Experts have well defined, internalized maps of their field

In industry, open literature reviews are a sporadic, non-standardized task. Professionals spend years building internal schemas of a domain and have social networks for sourcing (ie. discovery) and vetting relevant information if needed. That’s why the most excited of our initial user cohorts were graduate and PhD students and life science VCs, not professionals who specialized and actively work in biotech or pharma companies.

The VC use case of doing due diligence was predominantly around legal and IP concerns rather than anything that a literature review might produce. The conceptual clearance or feedback on the idea itself was done by sending it to a referred expert in their network, not by searching the literature for contradictions or feasibility.

Scientific Publishing comes with Signaling, Status Games, Fraud and often Very Little Information

Many published papers have methodological or statistical errors, are derivative and don’t add anything to the discourse, are misleading or obfuscated, sometimes even fraudulent, or were just bad research to begin with. Papers are first and foremost career instruments.

A naive system will weigh the insights extracted from a useless publication equally to a seminal work. You can correct for that by normalizing on citations and other tricks but that will just mimic and propagate existing biases and issues with current scientific publishing.

For example, if I design a system that mimics the practices of an expert reader, it would result in biases towards institutions, countries, the spelling of names, clout of authors and so on. That’s either unfair, biased and unequal or it is efficient, resourceful and a reasonable response based on the reader’s priors. In the end, there is no technological solution.

To push this point: if you believe in the great man theory of scientific progress, which has more merit than most want to admit, then why waste time making the other 99%+ of “unimportant” publications more searchable and discoverable? The “greats” (of your niche) will show up in your feed anyway, right? Shouldn’t you just follow the best institutions and ~50 top individuals and be done with your research feed?

Well in fact that’s what most people do.

Non-technical Life Science Labor Is Cheap

Why purchase access to a 3rd party AI reading engine or a knowledge graph when you can just hire hundreds of postdocs in Hyderabad to parse papers into JSON? (at a $6,000 yearly salary)

Would you invest in automation if you have billions of disposable income and access to cheap labor? After talking with employees of huge companies like GSK, AZ and Medscape the answer is a clear no.

Life science grads work for cheap, even at the post-grad level. In the UK a good bioinformatician can make 2-4 times what non-technical lab technicians or early career biologists make. In the US the gap is even larger. The Oxford Chemistry and Biology postdocs I met during bus rides to the science park (from my time at Oxford Nanopore) earned £35k at AstraZeneca 3. That’s half of what someone slightly competent earns after four months of youtubing Javascript tutorials 🤷‍♂️.

When we gave our pilot customers (biologists) spreadsheets with relations, mentions and paper recommendations that fit their exact requirements they were quite happy and said it saved them many hours per week. But it wasn’t a burning pain for them since their hourly wage is low either way.

I suspect the inconsequential cost of lab labor is a reason why computer aided biology (CAB) and lab automation, including cloud labs, are slow on the uptake … it can’t just be the clogging of liquid handling robots, right?

The Literature is Implicitly Reified in Public Structured Knowledge Bases

There are a ton of biomedical knowledge bases, databases, ontologies that are updated regularly 4. They are high signal because groups of experts curate and denoise them.

They’re tremendously useful and will eventually make biomedical intelligent systems and reasoners easier to build. Bayer, AZ, GSK all integrate them into their production knowledge graphs and their existence makes any additional commercial attempts to extract relations from the literature less needed.

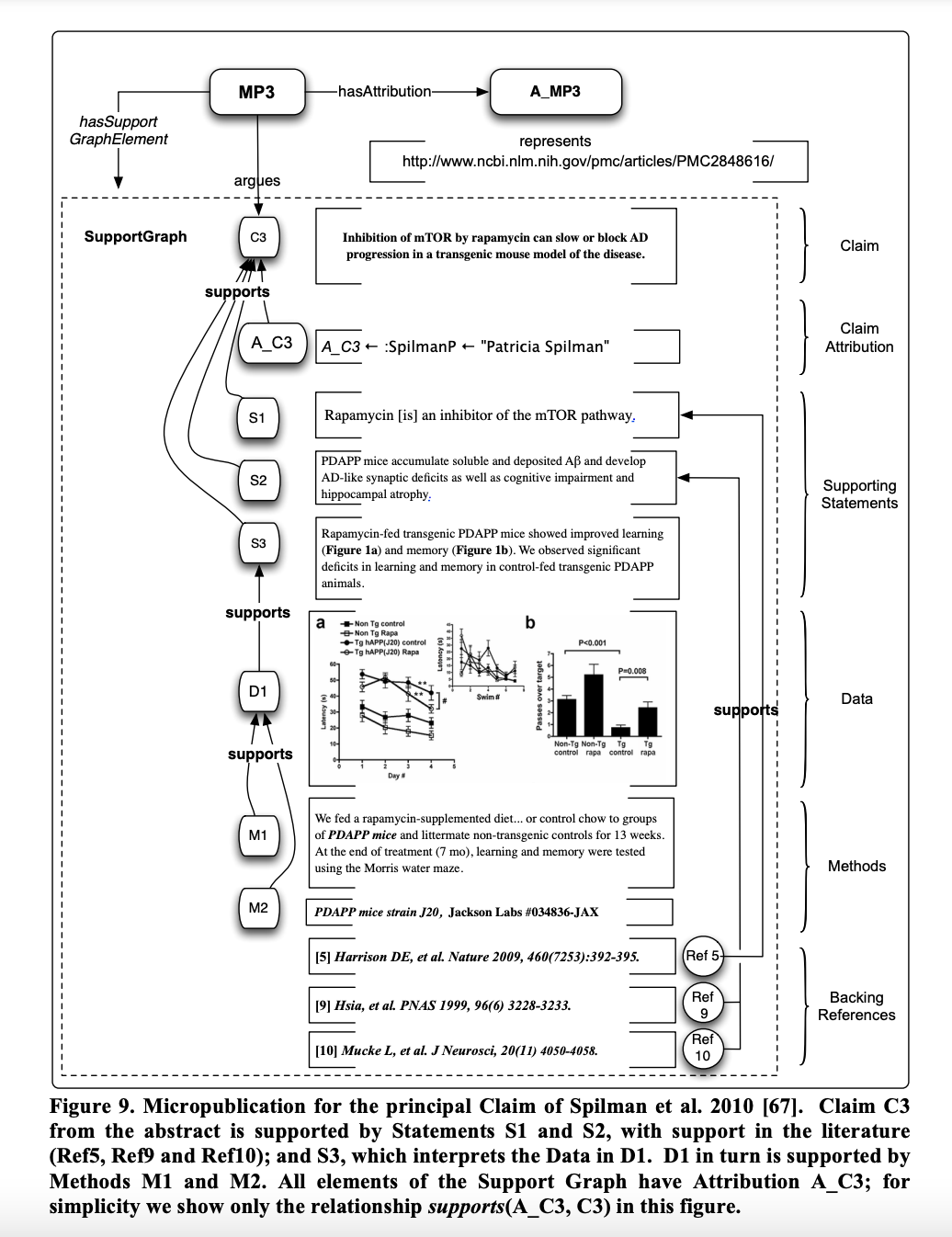

Advanced Interactives and Visualizations are Surprisingly Unsatisfying to Consume

As an artist this was the most painful lesson: Interactive graphs, trees, cluster visualizations, dendrograms, causal diagrams and what have you are much less satisfying than just lists, and most often lists will do.

On the other hand figures, plots and tables are at least as valuable as the actual text content of a paper but programs can’t intelligibly process and extract their contents (too much implied context).

That alone cuts the value of tools based on extracting insights from text in half (even with perfect extraction).

I realized that when I sat next to a pro “reading” a paper. It goes like this:

Scan abstract. Read last sentence of introduction. Scan figures. Check results that discuss the figures…

which was entirely different from my non-expert approach to reading papers. It makes sense: If you don’t have a strong domain model, you have no map that guides your scan, so you have to read it all top to bottom … like a computer.

This skip-and-scan selective processing also explains why agglomerative, auto-generated and compiled visualizations that incorporate a large corpus of papers are not that valuable: most of the sources would’ve been discarded up front.

Research contexts are so multiplicitous that every compiled report or visualization has huge amounts of noise and no user interface ever can be expressive enough to perfectly parameterize that context.

Unlike other Business Software, Domain Knowledge Bases of Research Companies are Maximally Idiosyncratic

Unlike other SaaS products that have standardized components (auth, storage, messaging, …), research knowledge graphs are always designed around the domain and approach of a company and are tightly integrated with their infrastructure, proprietary datasets and IP.

Two enterprises can encode the same corpus and will produce starkly different knowledge base artifacts.

Pharmas all have their own custom knowledge graphs and have entire engineering teams working full time on keeping schemas consistent (AstraZeneca and their setup).

Incompatibilities are ontological, not technological. Knowledge representations are design decisions. That’s why 3rd party knowledge graph vendors almost always have to do multi-month ongoing integration work and software consulting to make the sale. “Request a demo” often translates to “hire us for a bespoke data science project”.

To put knowledge base interoperability issues into perspective: even small database merges within the same company are still a huge problem in the software industry with no real, verifiable loss-free solution yet. Database incompatibilities have left many database administrators traumatized and knowledge bases have orders of magnitude higher schematic complexity than databases.

Divergent Tasks are Hard to Evaluate and Reason About

By “divergent” I mean loosely defined tasks where it’s unclear when they’re done. That includes “mapping a domain”, “gathering evidence”, “due diligence” and generally anything without a clear outcome like “book a hotel”, “find a barber”, “run this assay”, “order new vials”…

It’s easy to reason about convergent tasks, like getting an answer to a factual question or checking a stock price. It’s hard to reason about and quantify a divergent discovery process, like getting a map of a field or exploring a space of questions. You can’t put a price tag on exploration and so it is set very low by default. Companies employ “researchers” not for reading literature, but for lab or coding work, and the prestige of the “researcher” title pushes salaries down even further.

For example, take a product that offers corpus analytics:

What’s the value of getting a topic hierarchy, causal diagram or paper recommendations? It has some value if the user does not already have an operational model in their head, but how often is that the case? Most biotech research jobs require a PhD-level schema from the get-go, so what’s the added value of a noisy AI-generated one?

When the components (claims, descriptions, papers) and tasks (evidence review, due diligence, mapping) are ambiguous it is tough to reason about returns on investment with clients. This continues inside the company: employees can defend hours in the lab much better than hours “researching”.

We’re also naturally good at diverging and auto-association which makes an in silico version of that feature less valuable. It often ends up being more effort to parse what those interfaces return than to actually, simply think.

Besides, most biotechs (before Series C) don’t have the infrastructure to try out new ideas faster than they can read them. A week in the lab can save you a day in the library 6

Public Penance: My Mistakes, Biases and Self-Deceptions

Yes, I was raised Catholic. How did you know?

My biggest mistake was that I didn’t have experience as a biotech researcher or postdoc working in a lab. Sometimes being an outsider is an advantage, but in a field full of smart, creative people the majority of remaining inefficiencies are likely to come from incentives, politics and culture and not bad tooling.

I used to be friends with someone I consider exceptional who went on to found a biomedical cause-effect search engine (Yiannis the Co-founder of Causaly). It biased me towards thinking more about this category of products. Also, I had met employees at SciBite, a semantics company that was acquired by Elsevier for £65M, and was so unimpressed by the talent there that I was convinced that there’s lots left to do.

I wanted to make this my thing, my speciality. I had all the skills for making augmented interfaces for scientific text: machine learning, UI design and fine arts, frontend development, text mining and knowledge representation…

New intuitive query languages, no-code automation tools, new UIs for navigating huge corpora, automated reporting, cluster maps etc. were among the few applications that fit my aesthetics enough to withstand the disgusts of developing production software.

I had a good idea of the business model issues after a few weeks of talking to users, but I didn’t want to stop without a first-principles explanation of why that is. Sunk-cost fallacy and a feeling of fiduciary responsibility to Emergent Ventures played a part too, but mainly I wanted to know decisively why semantic structuring, academic search, discovery and publishing are such low-innovation zones. Looking back, I should’ve been OK with 80% certainty that it’s a lost cause and moved on.

I wanted to work on these tools because I could work with scientists and contribute something meaningful without needing to start from scratch teaching myself biology, bioinformatics and actually working in a lab. I had started from nothing too many times in my life and had gotten impatient. I wanted to leverage what I already knew. But there aren’t real shortcuts and impatience made me climb a local optimum.

Onwards

The initial not so modest proposal I sent to Emergent Ventures was along the lines of:

a next-generation scientific search and discovery web interface that can answer complex quantitative questions, built on extracted entities and relations from scientific text, such as causations, effects, biomarkers, quantities, methods and so on

I mentioned that

- there were major advances in relevant fields of machine learning

- that current interfaces are impoverished

- that an hour of searching could be collapsed into a minute in many cases

All of that is still true, but for the reasons I tried to share in this essay none of it matters.

Close to nothing of what makes science actually work is published as text on the web.

Research questions that can be answered logically through just reading papers and connecting the dots don’t require a biotech corp to be formed around them. There’s much less logic and deduction happening than you’d expect in a scientific discipline 5.

It’s obvious in retrospect and I likely persisted for too long. I had idealistic notions of how scientific search and knowledge synthesis “should work”.

I’ve been flirting with this entire cluster of ideas including open source web annotation, semantic search and semantic web, public knowledge graphs, nano-publications, knowledge maps, interoperable protocols and structured data, serendipitous discovery apps, knowledge organization, communal sense making and academic literature/publishing toolchains for a few years on and off … none of it will go anywhere.

Don’t take that as a challenge. Take it as a red flag and run. Run towards better problems.